Probing Activations in gpt2-small

An introduction to classifying Transformer outputs based on residual stream activations

Note: This is essentially a crosspost of a Python notebook I wrote to get my feet wet with Neel Nanda’s transformer-lens and to practice some basic mechanistic interpretability techniques. The notebook is hosted here; if you’d like to follow along, download it from GitHub and work with it in your IDE, since the hosted version formats badly and you cannot run the cells there.

Although I’ve been mostly convinced for the past half-year or so that AI governance work is much more neglected, tractable, and impactful than pure technical AI alignment stuff (I write about this here), I’ve retained two beliefs: (1) policy regulators should have more familiarity with the technologies they regulate and (2) many of the most promising regulatory proposals involve significant technical implementation burdens (think on-chip hardware for compute governance). Because of this—and because Neel Nanda remarkably found a way to make working with Transformer internals fun—I’ve spent some time in the last week learning how to train simple linear probes on Transformer activations.

I should quickly mention that linear probes of this type, to me, represent one of these technical details useful to policymakers. This is because linear probes, which have ~zero computational overhead, are the most basic form of a wide range of techniques that could form the backbone of effective partially-automated model oversight. That’s something we need, and definitely something governments are interested in!

With motivation out of the way: onto the body of the notebook.

Training Linear Classifiers on Transformer Activations

Neural networks, including the Transformers that underlie LLMs, compute intermediate values at each layer that are loosely analogous to biological neuron spikes. These intermediate values are known as *activations*.

Although we can directly observe the behavior of LLMs by prompting them with inputs and observing the corresponding outputs, we might also want to determine what processes occur in the intermediate steps between input and output, for example by viewing the activations our LLM produces on a certain input.

The internal activations of the model dictate the entirety of its 'thinking process' to determine which outputs (really next-token predictions) to create. Thus, scrutinizing activations is one of the primary tools we might use to determine, for example, whether a future highly-capable LLM is acting deceptively.
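To make "activations" concrete, here is a minimal sketch with a toy two-layer network in plain Python. The weights, the sizes, and the `forward_with_cache` helper are made up for illustration and have nothing to do with gpt2-small; the point is only that the intermediate values computed on the way to the output can be saved and inspected.

```python
def forward_with_cache(x, w1, w2):
    """Run a toy 2-layer network and keep every intermediate value."""
    cache = {}
    # hidden pre-activations: one weighted sum per hidden unit
    cache["hidden_pre"] = [sum(w * xi for w, xi in zip(row, x)) for row in w1]
    # hidden activations after a ReLU nonlinearity
    cache["hidden_post"] = [max(0.0, h) for h in cache["hidden_pre"]]
    # output: weighted sum of the hidden activations
    output = sum(w * h for w, h in zip(w2, cache["hidden_post"]))
    return output, cache

# hypothetical weights for a network with 2 inputs and 3 hidden units
w1 = [[1.0, -1.0], [0.5, 0.5], [-2.0, 1.0]]
w2 = [1.0, 2.0, 3.0]

output, cache = forward_with_cache([2.0, 1.0], w1, w2)
print(cache["hidden_post"])  # [1.0, 1.5, 0.0]
print(output)                # 4.0
```

The `cache` dictionary here plays the same role, in miniature, as the activation cache we will pull out of gpt2-small.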

Here we’ll walk through the process of training simple logistic classifiers ('linear probes') on the activations of gpt2-small, a small Transformer model, using Neel Nanda's brilliant transformer-lens library. Though this is one of the simplest things one can do with activations, it's highly instructive for working with transformer-lens, pandas, Transformers, and sklearn. The skills needed to walk through this task should form a strong foundation for doing simple interpretability experiments with Transformers.

I won’t bore you with all of the imports and basic setup (download the notebook if you’re interested in following along). Let's start by loading the gpt2-small model using transformer-lens's incredible HookedTransformer.from_pretrained method. We also disable gradient tracking globally: we only need forward passes to read activations, and skipping the gradient bookkeeping saves memory.

    # HookedTransformer, torch, and device all come from the notebook's setup cells
    model = HookedTransformer.from_pretrained('gpt2-small', device=device)
    torch.set_grad_enabled(False)

Now that we have a model, we can use the run_with_cache method from transformer-lens to get tons of information about the model's forward pass on whatever input we'd like. This information happens to include all of the model activations! The next code block prints the names of all the residual stream components we can access this way.

prompt = '''Although it is true that we can directly observe the behavior of LLMs by prompting them with inputs and observing the corresponding outputs,'''

_, cache = model.run_with_cache(prompt)

res_stream = cache.decompose_resid(return_labels=True, incl_embeds=True)

# the labels for each of the activation tensors contained. There are lots!

print(res_stream[1])Indeed, if we run this, we get

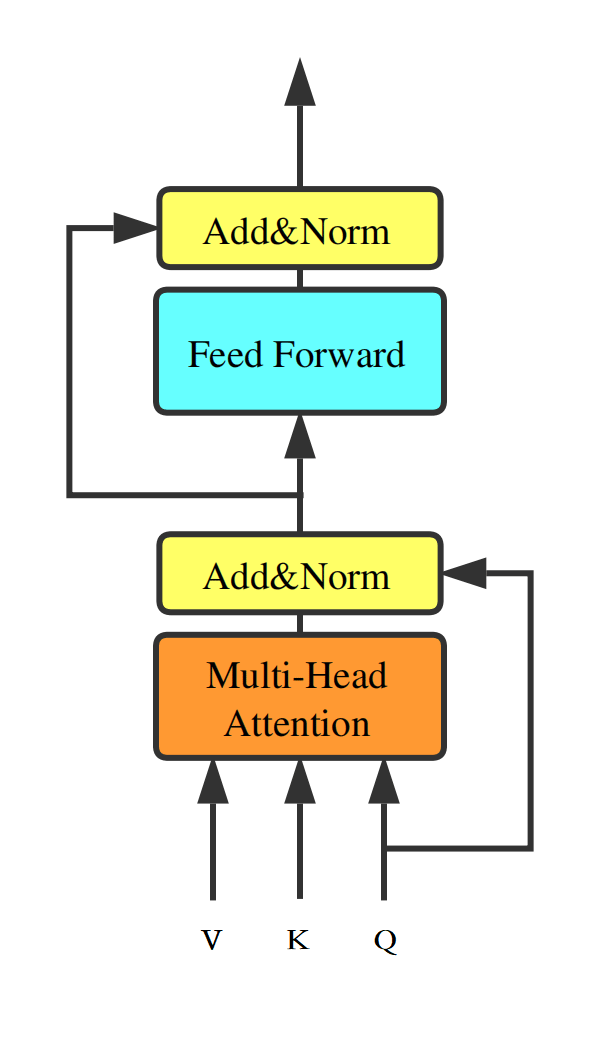

['embed', 'pos_embed', '0_attn_out', '0_mlp_out', '1_attn_out', '1_mlp_out',..., '11_attn_out', '11_mlp_out']So, what’s going on here? Well, as you can see, gpt2-small has an embedding layer, a positional embedding layer, and twelve hidden layers, each of which has an 'mlp' (multilayer perceptron, in this case fully connected linear layers) and 'attn' (attention, another segment of the Transformer block) section.

These activation values, especially the attention outputs, are our primary interest.
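The label list follows a regular pattern, which the sketch below reproduces in plain Python. `resid_component_labels` is a hypothetical helper written for this post, not part of transformer-lens; it only mirrors the printed labels shown above.

```python
def resid_component_labels(n_layers=12, incl_embeds=True):
    """Rebuild the label pattern printed above (illustrative only)."""
    labels = ["embed", "pos_embed"] if incl_embeds else []
    for layer in range(n_layers):
        # each block contributes an attention output, then an MLP output
        labels.append(f"{layer}_attn_out")
        labels.append(f"{layer}_mlp_out")
    return labels

labels = resid_component_labels()
print(len(labels))              # 26: 2 embedding terms + 2 per layer * 12 layers
print(labels[:4], labels[-2:])
```

So for gpt2-small there are 26 components in total, and the component for "layer L, attention" sits at a predictable place in the list.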

Let's write a function that takes in a prompt (our model input), a residual stream layer L (gpt2-small has 12 layers, so a value from 0 to 11), a typ (either getting 'mlp' or 'attn' activations—see Transformer architecture), and a token_range (in other words, the token range of the input that we want to get activations from).

    def get_layer_actives(prompt, L=5, typ="attn", token_range=slice(0,6)):
        _, cache = model.run_with_cache(prompt)
        # we get residual stream activations using this transformer_lens function;
        # note that pos_slice=slice(0, -1) drops the final token position
        res_stream = cache.decompose_resid(layer=L+1, return_labels=False, mode=typ, incl_embeds=False, pos_slice=slice(0, -1))
        # we only need the activations from the specified layer
        L_layer_activations = res_stream[-1, 0, token_range, :] #layer batch pos d_model
        return L_layer_activations

This is the function we will use to get the set of activations coming out of any layer in the Transformer we’d like.
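The indexing in `get_layer_actives` is easy to misread, so here is a small sketch on a dummy NumPy array standing in for the stacked tensor (the real one is a torch tensor, and the sizes here are made up). It assumes the stacked result has shape [component, batch, pos, d_model], as the comment in the function says. The thing to notice is that `pos_slice=slice(0, -1)` removes the final token before `token_range` is applied, so negative indices in `token_range` count back from the second-to-last token.

```python
import numpy as np

n_components, batch, n_tokens, d_model = 6, 1, 10, 4

# dummy "activations": every entry at token position p holds the value p,
# so we can read off which positions survive the slicing
positions = np.arange(n_tokens, dtype=float).reshape(1, 1, n_tokens, 1)
stack = np.broadcast_to(positions, (n_components, batch, n_tokens, d_model))

# step 1: mimic pos_slice=slice(0, -1), which drops the last token
trimmed = stack[:, :, slice(0, -1), :]

# step 2: last component, first batch element, chosen token range
token_range = slice(-4, -1)
selected = trimmed[-1, 0, token_range, :]

print(selected.shape)   # (3, 4): three tokens, d_model features each
print(selected[:, 0])   # original token positions 5, 6 and 7
```

With a 10-token prompt, `slice(-4, -1)` therefore reads positions 5 to 7 rather than 6 to 8.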

We're going to use this function to get activations from a *ton* of inputs (to use as our data for the classifiers we'll train), and these activations are thousands of values. So, we should write these data to a csv file and use that as our database for these many activations. This function takes in a set of activations in the format from get_layer_actives, a class label indicator, and a file_name to be written to, defaulting to 'data.csv'.

    def add_to_data(L_layer_activations, label, file_name='data.csv'):
        label = int(label)
        # we flatten the activations so that they can be easily written to the .csv file
        write_vals = torch.flatten(L_layer_activations).tolist()
        # we write to the .csv in the format: label, activ0, activ1, ...
        with open(file_name, 'a') as file:
            file.write(f"{label}," + ",".join(str(val) for val in write_vals) + "\n")

Now that we can get activations on any prompt we would like and write them to a .csv file with class labels, let's create a function that takes two sets of prompts (set1 and set2), attn_or_mlp (usually we will want 'attn' activations since they are more central to Transformer behavior, but 'mlp' activations are available), a token_range like above, a layer L in the residual stream to read activations from, and a file_name to populate with our activations. This function will write the activations at L for each prompt in each set, along with label 0 for the first set and label 1 for the second set:

    def populate_data(set1, set2, attn_or_mlp='attn', token_range=slice(0,6), file_name='data.csv', L=5):
        for prompt in set1:
            add_to_data(get_layer_actives(prompt, typ=attn_or_mlp, token_range=token_range, L=L), label=0, file_name=file_name)
        for prompt in set2:
            add_to_data(get_layer_actives(prompt, typ=attn_or_mlp, token_range=token_range, L=L), label=1, file_name=file_name)

Now we can call this function on whatever sets of sentences we'd like. You can either write your own in the notebook, or use some of the ones I've already written. Here, I'll use the helpful_sentences and non_helpful_sentences sets, which contain examples of helpful and unhelpful responses to requests for help, respectively, generated by GPT-4.

    # clear the file to be used
    with open("data.csv", "w") as file:
        file.write("")

    # define usage parameters for this call
    label_0_set = helpful_sentences
    label_1_set = non_helpful_sentences
    token_slice = slice(-4,-1)
    attention_or_mlp = 'attn'

    # call the function to populate 'data.csv' with activations
    populate_data(label_0_set, label_1_set, attn_or_mlp=attention_or_mlp, token_range=token_slice)

    # we write some labeling for the datasets used for later logging purposes
    curr_test = ["helpful_sentences", "non_helpful_sentences"]

After running this code block, we get a massive ‘data.csv’ file with a whole lot of floating point numbers! The first value in each line is a 0 or 1 (our label), and there is one row for each prompt in each dataset. These are our activations, and they look something like this:

    0,-0.13591215014457703,0.22796043753623962,-0.5129720568656921,-0.05894647538661957,-0.18508855998516083,-0.3236595094203949,-0.25563427805900574,-0.09986594319343567,0.4021266996860504,...

Now we need to do a little bit of work with this .csv to get it into a useful format for training a linear probe (this mess of numbers won’t cut it). We'll use pandas and sklearn to load the data and train the probe in the same function. This function also takes in a current_test list for documentation purposes. We'll eventually pass in the curr_test list defined in the logging bit of our last code block.

Follow along using the comments in the function.

    import pandas as pd
    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler

    def read_and_train(current_test):
        # get our full dataset by reading the .csv, but don't use a header!
        # That would get rid of our first row of data
        full_data = pd.read_csv("data.csv", header=None)

        # some activations have NaN values, so we can drop those features;
        # we have plenty of features as it is
        full_data = full_data.dropna(axis=1)

        # look closely at the slicing here (ignore iloc). Why do these give
        # the features and labels respectively?
        full_X = full_data.iloc[:, 1:].values
        full_y = full_data.iloc[:, 0].values

        # we can use sklearn's train_test_split function to get a random
        # split of the data in a nice way
        X_train, X_test, y_train, y_test = train_test_split(full_X, full_y, train_size=0.7, stratify=full_y)

        # we want to standardize our input values based only on the training
        # data mean and sd, but applied to train and test
        stds = StandardScaler()
        X_train_std = stds.fit_transform(X_train)
        X_test_std = stds.transform(X_test)

        # we use sklearn's built-in logistic classifier with L2 regularization
        # (C is the inverse regularization strength) to first fit a model to our
        # training data and then test it (score gives accuracy) on our test data
        lr = LogisticRegression(penalty='l2', C=1, max_iter=10000)
        probe = lr.fit(X_train_std, y_train)
        score = lr.score(X_test_std, y_test)

        # we print the score, and then log the score in our 'results.txt'
        # file that holds the log for all our experiments in this notebook
        # (note: attention_or_mlp is read from the notebook's global scope)
        print(score)
        with open("results.txt", "a") as file:
            file.write(f"\nFor {current_test[0]} versus {current_test[1]} on {attention_or_mlp} data, score was {score}")

Great, we've got a whole function pipeline to do our five-step probing process:

1. Get the activations on our inputs,

2. Write these activations to our data file,

3. Read the activations and format them as a useable dataset,

4. Train a logistic classifier (or whatever classifier we’d like) on the train set, and

5. Test the classifier on our test set, writing the results to our log.
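The writing and reading steps above (the second and third) can be sketched with the standard library alone. This illustrates the `label, activ0, activ1, ...` row format rather than reproducing the notebook's code: the file name, the tiny made-up rows, and the `write_row` and `read_rows` helpers are all hypothetical.

```python
import csv
import os
import tempfile

def write_row(path, label, values):
    """Append one 'label, activ0, activ1, ...' row to the CSV file."""
    with open(path, "a", newline="") as f:
        csv.writer(f).writerow([int(label), *values])

def read_rows(path):
    """Read the file back into a feature matrix X and a label list y."""
    X, y = [], []
    with open(path, newline="") as f:
        for row in csv.reader(f):
            y.append(int(row[0]))                  # first column is the label
            X.append([float(v) for v in row[1:]])  # the rest are activations
    return X, y

path = os.path.join(tempfile.mkdtemp(), "toy_data.csv")
write_row(path, 0, [-0.13, 0.22, -0.51])   # made-up activations, label 0
write_row(path, 1, [0.40, -0.09, 0.31])    # made-up activations, label 1

X, y = read_rows(path)
print(y)     # [0, 1]
print(X[0])  # [-0.13, 0.22, -0.51]
```

Keeping the label in the first column is what makes the later `iloc[:, 0]` and `iloc[:, 1:]` slicing work.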

Let's call our read_and_train function from above to see what score we've achieved!

    read_and_train(curr_test)
    # output: 0.9818181818181818

Allowing for some run-to-run variance (the train/test split is random), we’ve achieved very high accuracy (~98%) on this test set! As it turns out, then, the difference between helpful and unhelpful responses is strongly linearly represented in a middle layer of gpt2-small's residual stream, here the attention output of layer 5 (note this model is only pretrained). That's very exciting, and bodes well for more complex experiments.
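To make "linearly represented" concrete: a linear probe is just a weight vector and a bias, and its prediction is a thresholded dot product with the activations. The sketch below fits one with plain gradient descent in NumPy on synthetic data. It is not what the notebook does (the notebook uses sklearn's `LogisticRegression`), and the data, learning rate, penalty, and step count are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# synthetic "activations": two classes whose means differ along every feature
n_per_class, d = 100, 8
X = np.vstack([rng.normal(-1.0, 1.0, (n_per_class, d)),
               rng.normal(+1.0, 1.0, (n_per_class, d))])
y = np.array([0] * n_per_class + [1] * n_per_class)

# shuffle, then hold out 30% for testing
order = rng.permutation(len(y))
X, y = X[order], y[order]
n_train = int(0.7 * len(y))
X_train, X_test = X[:n_train], X[n_train:]
y_train, y_test = y[:n_train], y[n_train:]

# standardize with statistics from the training split only
mean, std = X_train.mean(axis=0), X_train.std(axis=0)
X_train_std = (X_train - mean) / std
X_test_std = (X_test - mean) / std

# logistic regression with a small L2 penalty, fit by gradient descent
w, b = np.zeros(d), 0.0
lr, l2 = 0.1, 1e-3
for _ in range(500):
    p = 1.0 / (1.0 + np.exp(-(X_train_std @ w + b)))  # predicted P(label = 1)
    w -= lr * (X_train_std.T @ (p - y_train) / n_train + l2 * w)
    b -= lr * np.mean(p - y_train)

# the probe's prediction is a thresholded linear function of the activations
accuracy = np.mean(((X_test_std @ w + b) > 0) == y_test)
print(accuracy)
```

If a probe this simple separates two classes, the information distinguishing them must be readable along a single direction in activation space.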

One experiment that immediately comes to mind is seeing which layers have the strongest linear representation for some set of prompts we want to separate with our classifier. We can achieve this with a sweep over the layers!

This is quite simple to achieve, actually. We need only specify the layers to test—from there we can call our five-step pipeline over each layer. Here we do that:

    label_0_set = helpful_sentences
    label_1_set = non_helpful_sentences

    # there are 12 layers in gpt2-small
    layers_testing = list(range(12))
    token_slice = slice(-4, -1)

    for layer in layers_testing:
        # clear csv each time
        with open("data.csv", "w") as file:
            file.write("")

        for sentence in label_0_set:
            add_to_data(get_layer_actives(sentence, typ='attn', token_range=token_slice, L=layer), label=0)
        for sentence in label_1_set:
            add_to_data(get_layer_actives(sentence, typ='attn', token_range=token_slice, L=layer), label=1)

        # using this extra logging, our log will also tell us the layers
        # corresponding to each score value
        curr_test = [f"layer {layer} helpful", "non_helpful"]
        read_and_train(curr_test)

And for our run, we get the following results:

    0.9272727272727272
    1.0
    0.9272727272727272
    0.9272727272727272
    0.9272727272727272
    1.0
    0.9454545454545454
    0.9636363636363636
    0.9818181818181818
    0.9636363636363636
    0.9818181818181818
    0.9818181818181818

These are the classifier's accuracies when trained on the attention-output activations of each layer, from the first (layer 0) down to the last (layer 11), respectively. These datasets are relatively easy to separate, so most of the 'pattern' in changing accuracy over each layer is down to variance. You should experiment with some of the other datasets in this notebook (in the "Datasets" section), or some of your own, to see if any give you a nice score pattern over the layers.
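A rough way to see how much of the layer-to-layer wobble is noise is the binomial standard error of an accuracy estimate. The sketch below assumes a test set of 55 prompts; that number is inferred from the fact that every score above is a multiple of 1/55, and is not stated in the notebook.

```python
import math

def accuracy_standard_error(accuracy, n_test):
    """Binomial standard error of an accuracy measured on n_test examples."""
    return math.sqrt(accuracy * (1.0 - accuracy) / n_test)

n_test = 55  # assumption: inferred from the scores, not stated in the notebook
low, high = 51 / 55, 54 / 55  # 0.927... and 0.981..., two of the scores above

se = accuracy_standard_error(low, n_test)
print(round(se, 3))           # 0.035
print(round(high - low, 3))   # 0.055, a gap of only three test prompts
```

Under that assumption, a standard error of about 3.5 percentage points means gaps of a few points between layers are within noise, which fits the reading that the pattern is mostly variance.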

Another thing we might want to try to do with linear probes is test for generalization. Generalization is arguably the most useful behavior our linear probes might exhibit for us. This is because we could in theory use the generalizability of linear representations in activations to probe for very bad/dangerous behavior using only data from benign misbehavior.

For example, future government evaluators, safety testers, automatic output evaluation schemes, etc. will want to determine whether a model is likely to take an action that *it* knows is against human interests. It may, however, be too dangerous, infeasible, or illegal to test the model on inputs of that kind. If the generalizability of our linear probes holds, testing for this behavior would be as simple as training a probe on activations for benign examples of misbehavior (e.g. "Finally, it’s time to get rich by stealing from the Joneses!") and running that probe on the activations from every potentially dangerous inference step. If the probe has high sensitivity and classifies an output as 'scheming,' 'misbehaving,' or the like, that inference instance can be scrutinized further by more complex tools or by human eyes, and further inference can be halted.
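As a sketch of what screening inference steps with a probe could look like, the snippet below scores activation vectors with a fixed linear probe and flags any that cross a threshold. Everything here is hypothetical: the weights, the threshold, and the `flag_for_review` helper are made up, and a real monitor would use a probe trained as described in this post.

```python
import math

def probe_score(activation, weights, bias):
    """Probability the probe assigns to the 'misbehaving' class."""
    logit = sum(w * a for w, a in zip(weights, activation)) + bias
    return 1.0 / (1.0 + math.exp(-logit))

def flag_for_review(activations, weights, bias, threshold=0.5):
    """Return indices of inference steps whose score meets the threshold."""
    return [i for i, act in enumerate(activations)
            if probe_score(act, weights, bias) >= threshold]

# made-up probe and three made-up activation vectors
weights, bias = [2.0, -1.0, 0.5], -0.5
steps = [[0.1, 0.9, 0.0],   # logit = 0.2 - 0.9 + 0.0 - 0.5 = -1.2
         [1.5, 0.2, 0.4],   # logit = 3.0 - 0.2 + 0.2 - 0.5 =  2.5
         [0.0, 0.1, 0.0]]   # logit = 0.0 - 0.1 + 0.0 - 0.5 = -0.6

print(flag_for_review(steps, weights, bias))                 # [1]
print(flag_for_review(steps, weights, bias, threshold=0.2))  # [0, 1, 2]
```

Lowering the threshold raises sensitivity at the cost of more false alarms, which is the trade-off a human-in-the-loop review process would have to tune.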

Luckily, some early research indicates that linear probes of this type might not only generalize well, but also increase in effectiveness as model capabilities improve.

So, let's test some generalization for ourselves! To recap what this task involves, we need to again follow our five-step plan, except this time we want to train on a certain dataset (with two subsets inside, for 0 and 1 labels) but test on a different dataset (also with two subsets inside). Because we have these useful functions written up already, we don't even have to change very much.

Here, we'll write a function generalize that takes in four datasets, two for training and two for testing, as python lists, a token_slice for the activation section of each prompt, and a layer L to test at.

    def generalize(train_data_0, train_data_1, test_data_0, test_data_1, token_slice=slice(-5, -1), L=6):
        # we'll need two different files for data now, since
        # we split up train and test. Let's clear them both
        with open("gen_data.csv", "w") as file:
            file.write("")
        with open("gen_test_data.csv", "w") as file:
            file.write("")

        # we'll use the length of the full train set later
        # to split up train and test
        n = len(train_data_0) + len(train_data_1)

        # write data to each file
        populate_data(train_data_0, train_data_1, token_range=token_slice, L=L, file_name="gen_data.csv")
        populate_data(test_data_0, test_data_1, token_range=token_slice, file_name='gen_test_data.csv', L=L)

        # we get both datasets and then concatenate them in
        # order to easily drop NaN columns in each
        train_set = pd.read_csv("gen_data.csv", header=None)
        test_set = pd.read_csv("gen_test_data.csv", header=None)
        full_set = pd.concat([train_set, test_set])
        full_set = full_set.dropna(axis=1)

        # forming datasets. Make sure you understand the slicing
        # (iloc is positional, so the first n rows are the train rows)
        X_train = full_set.iloc[0:n, 1:].values
        X_test = full_set.iloc[n:, 1:].values
        y_train = full_set.iloc[0:n, 0].values
        y_test = full_set.iloc[n:, 0].values

        # scale the datasets by train mean and sd
        stds = StandardScaler()
        X_train_std = stds.fit_transform(X_train)
        X_test_std = stds.transform(X_test)

        # train the linear probe, return accuracy on test set
        lr = LogisticRegression(penalty='l2', C=1, max_iter=10000)
        probe = lr.fit(X_train_std, y_train)
        return lr.score(X_test_std, y_test)

Now that we've got a beautiful function like this, we can check that it works by inputting sections of datasets we already know are easily linearly separable, which should just approximate a random train-test split. This won't test generalization yet, but it will ensure that our function works.

    print(generalize(helpful_sentences[0:50], non_helpful_sentences[0:50], helpful_sentences[50:90], non_helpful_sentences[50:90], L=1))
    # output: 1.0

Sure enough, when we run the above code block we get perfect accuracy on this easily-separable dataset (essentially the same as the one we used before).

Based on the high accuracy of the model here, it looks like our function is capturing the behavior we want. Now let's try exactly the experiment we wanted to do before: generalizing from benign misbehavior to dangerous behavior. Basically, we'll set out the following: label 0 in train is our helpful_sentences dataset; label 1 in train is our non_helpful_sentences dataset; label 0 in test is a set of randomly generated fill-in-the-blank sentences using 'affirmative to good tasks'; and label 1 in test is a randomly generated set using 'affirmative to bad/dangerous tasks.'

    print(generalize(helpful_sentences, non_helpful_sentences, dangerous_good_behavior, dangerous_misbehavior, token_slice=slice(-3, -1), L=1))
    # output: 0.68

For datasets that are so drastically different, and a test set that is from a vastly different distribution, on a model as small and incapable as gpt2-small, the accuracy we get (68% here) is not bad! We can also sweep over the layers, if we'd like:

    layers = list(range(12))
    for layer in layers:
        print(generalize(helpful_sentences, non_helpful_sentences, dangerous_good_behavior, dangerous_misbehavior, token_slice=slice(-3, -1), L=layer))

This gives

    0.8
    0.68
    0.77
    0.73
    0.64
    0.585
    0.73
    0.61
    0.655
    0.685
    0.605
    0.515

Decent generalization, especially if we pick some of the best layers! It also arguably seems like this split is more strongly represented in early layers, which is quite an interesting observation from the perspective of model internals.
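A quick summary of the sweep makes the early-versus-late comparison easier to judge. The scores below are copied from the output above; splitting the twelve layers into two halves is just one arbitrary way to summarize them.

```python
# accuracies from the sweep above, indexed by layer (0 to 11)
scores = [0.8, 0.68, 0.77, 0.73, 0.64, 0.585,
          0.73, 0.61, 0.655, 0.685, 0.605, 0.515]

best_layer = max(range(len(scores)), key=lambda i: scores[i])
early_mean = sum(scores[:6]) / 6   # layers 0-5
late_mean = sum(scores[6:]) / 6    # layers 6-11

print(best_layer)             # 0
print(round(early_mean, 3))   # 0.701
print(round(late_mean, 3))    # 0.633
```

One caveat: picking the best layer using the same test set it is scored on gives an optimistic estimate, so the 0.8 at layer 0 should be read as an upper-ish figure rather than what a pre-chosen layer would achieve.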

I think it should be mentioned how incredible it is that this generalization works at all. There are so many features of this data, and the fact that a simple-as-it-gets linear classifier can pick out a separator that holds up across such a large distribution shift is remarkable, and promising for safety!

Feel free to spend some time in the notebook playing with different splits using generalize. What achieves the best generalization? Can you create your own datasets that do well here?

Conclusion

That’s it for this basic introduction to using transformer-lens to probe activations in pretrained models. Many thanks again to Neel Nanda for creating this library. I think a natural step from here is to try activation ablations and replacements in the residual stream. Getting excited about doing interpretability experiments is really easy with this library, and one goal here is to give some people the spark to get their hands dirty working on some of the most interesting and relevant problems there are.

For my part, I will be spending some more time in the near future experimenting with Sparse Autoencoders (SAEs), which have really piqued my interest due to the recent Anthropic interpretability agenda with Dictionary Learning and SAEs. I hope you’ve enjoyed this gentle introduction.