Mutual Verification for Competing National AI Projects

How verification methods can reduce competitive pressures on nations racing to transformative AI

This is the third post in what roughly amounts to a series on government nationalization of AI development. The first was a general introduction to nationalization for AI. The second tried to estimate the probability of such a nationalization and teased some implications. In this post, I explore verification methods and their implications for international coordination.

Suppose the US and China nationalize AI development and begin to race towards general AI systems that can transform their economies and militaries. In such a scenario, the first to cross some finish line of automation or intelligence may achieve an unprecedented geopolitical advantage. Such a race mirrors current private-sector development. And it brings along all its loose, untidy baggage, too.

The leading developers, in this case the US and China, have huge incentives to throw caution to the wind. In particular, they are likely to underrate AI’s catastrophic safety problems, skimp on safety research and evaluation, and make irresponsible go-ahead decisions. All of this to reduce the chance of falling behind.

What if there were a way to beat the odds and develop responsibly, even in a race as high-stakes and blatantly adversarial as this one?

Proponents of mutual verification argue that it provides a way out. The argument goes something like this.

The Argument for Mutual Verification

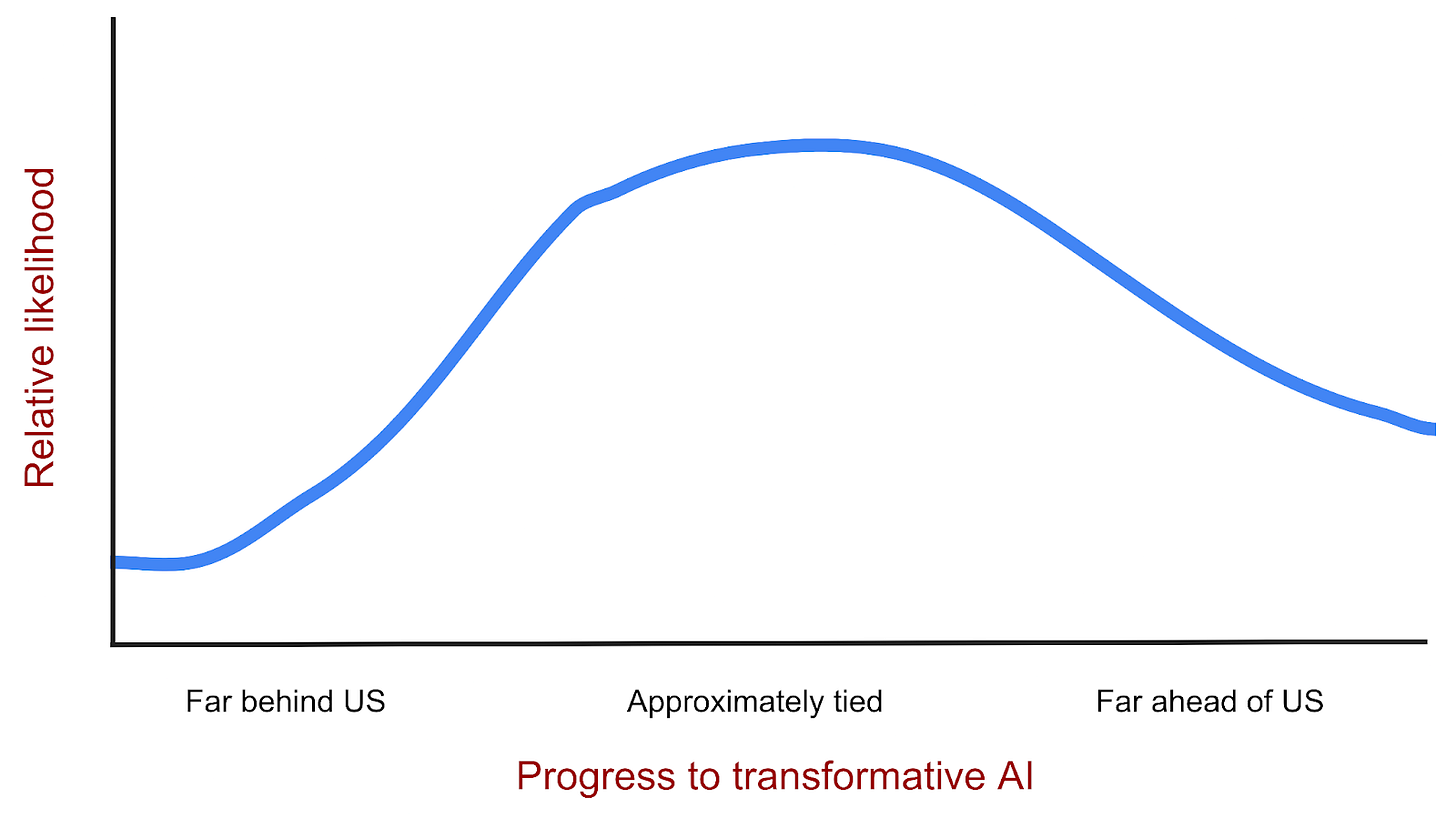

Developers in a first-to-the-line technological development race can’t adequately handle dangerous side effects because they’re always so damn worried about falling behind. It’s like a footrace between the US and China. Except every time the US looks to the next lane to see where China is, all it sees is a wide probability distribution like this:

This is a scary situation for a state! Things are blurry. There is a plausible chance that the adversary has made far more progress than the US has. If that turned out to be true, the American century would surely be in its twilight hour. The US could be on the precipice of losing the most important technological race in history. An unacceptable outcome, especially for a nation that understands the implication of losing this race: annihilation or relegation to geopolitical irrelevance.

Yet, the situation is symmetrical. China looks to its right and sees in the other lane the same distribution. Both see an unacceptably high chance that their adversary is far ahead! The consequences of this possibility are so worrisome that each will do any number of irresponsible things to prevent losing: safety skimping, unprotected training runs, reckless deployment... war?

The US and China could in theory reduce the probability of being far behind to an acceptable level by agreeing, via a ratified international treaty, to certain development limitations. For example, they could agree to a compute use limitation for large training runs. However, under the status quo, verifying adherence to such a treaty looks impossible. Today, we can hardly tell how many AI chips China smuggles or purchases, much less in which data centers they are installed. Forget trying to determine what the chips are being used for. If adherence cannot be observed and verified, and each state has compelling reasons to cheat undetected, the likelihood of being far behind remains unacceptably high.

However, suppose this treaty also stipulated and provided effective, high-fidelity verification methods to each state. In this case, treaty terms such as “no development beyond this point until X, Y, and Z conditions are met” can work. Call this the “treaty point.” With an agreement like that, when the US looks over its shoulder, it sees China like this:

There’s still some chance that the adversary is ducking the treaty since verification evasion is always possible. But it’s unlikely enough that we’ve reached an acceptable level of “far behind” risk. Now there exists a mechanism through which each state’s development project can proceed at a pace slower than “every delay risks annihilation.” Given the right treaty conditions, this could allow for significant investment into safe, rather than expedient, development. Perhaps, for example, a treaty could include “cooldown” periods without large training runs for developers to focus on safety.
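
The intuition about blurry versus sharp distributions can be made concrete. As a minimal sketch, assume each state’s belief about its rival’s lead is a normal distribution centered on an even race, and call any lead beyond some margin “far behind.” Verification then works by shrinking the spread of that belief. The function name, the units (months of progress), and every number below are illustrative assumptions, not estimates.

```python
import math


def far_behind_risk(mean_lead: float, sigma: float, margin: float) -> float:
    """Probability that the rival's lead exceeds `margin`, assuming the belief
    about that lead is normally distributed (an illustrative assumption)."""
    z = (margin - mean_lead) / sigma
    # Upper-tail probability of a standard normal: P(Z > z) = erfc(z / sqrt(2)) / 2
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical units: months of progress. A lead beyond MARGIN counts as "far behind".
MARGIN = 6.0

blurry = far_behind_risk(mean_lead=0.0, sigma=6.0, margin=MARGIN)    # no verification
verified = far_behind_risk(mean_lead=0.0, sigma=1.5, margin=MARGIN)  # with verification

print(f"far-behind risk without verification: {blurry:.4f}")
print(f"far-behind risk with verification:    {verified:.6f}")
```

With these made-up numbers, the far-behind risk falls from roughly 16% to well under 0.01%. The normal shape is only a convenience; the qualitative point, that narrowing the spread collapses the tail where the most alarming scenarios live, should hold for most reasonable choices of distribution.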

Such a mutual verification setup enables competing nations to agree on terms of development. An agreement of this sort in turn decreases the likelihood that anyone stumbles into an AI catastrophe or makes a go-ahead call when a catastrophe seems possible. The big question mark is whether mutual verification is possible.

Pathways to Mutual Verification

In the late 1960s, it became clear that investment in anti-ballistic missile technology might yield a successful missile defense system, thereby undermining the ghastly protection of mutually assured destruction. Faced with that “stark menace of nuclear war,”1 the world’s two superpowers begrudgingly convened for strategic arms limitation talks (SALT). SALT I, the agreement signed in 1972 on this basis, limited the number and character of missile interceptors on each side and was “the first time during the Cold War” that “the United States and Soviet Union had agreed to limit the number of nuclear missiles in their arsenals. SALT I is considered the crowning achievement of the Nixon-Kissinger strategy of détente.”2

The superpowers pursued a follow-on agreement, reaching a framework at Vladivostok in 1974, in response to additional, rapid, and unpredictable changes to the capabilities of their arsenals (think: emergent capabilities). However, that agreement, known as SALT II, though eventually signed in 1979, was never ratified by the US Senate, in part because of disputes over whether its terms could be verified.

At the time, so-called “National Technical Means” (NTM) were used for verification. During the SALT era, these mostly included telemetry, satellite imagery, and aerial reconnaissance. The verification disputes that arose during SALT II indicate that NTM were sufficient to detect construction of large-scale missile interception systems, but not all of the complex ICBM, SLBM, and MIRV research underway by the mid-1970s.

In many ways, missile development should be easier to detect than AI development. For one thing, chips are small, while missile technologies and their accompanying baggage trains of vehicles, propellants, manufacturing, etc. are large. In addition, AI is significantly more dual-use than nuclear technology, so knowing how much compute a state has says little about what that compute is doing. Meta, for example, owns a massive share of the world’s cutting-edge AI compute. But it uses the vast majority of it to do large-scale inference to power the recommendation and advertising features of Facebook and Instagram, hardly a national security-relevant application. Nuclear power generation, on the other hand, is well-documented, observable, and relatively unpopular (only a very small fraction of annual added generating capacity is nuclear), so it offers comparatively little civilian cover.

However, some prior work has explored pathways to reliable mutual verification. As I see it, there are three major camps (which could be pursued independently or in tandem):

National intelligence and technical means;

National or international inspections or monitoring; and

On-chip hardware mechanisms.

Each differs in feasibility, resource requirements, and effectiveness.

National intelligence and technical means

This method of mutual verification essentially relies on the well-established and well-resourced national security and intelligence apparatuses of the US and China. How does the US estimate that China’s military budget is 1.5-3x larger than claimed? National intelligence and technical means.

This camp proposes targeting and expanding these capabilities for detecting the progress of the adversary nation’s AI project. Concrete methods include satellite and aerial imagery, global telemetry data, radar, seismography, EMP signatures (energy detection), and undersea acoustics. During the Cuban Missile Crisis, for example, US analysts used satellite and aerial imagery to identify missile shipments to and emplacements on the island.3

These methods might also include standard espionage and cyberintelligence efforts.

I can’t say with authority whether a focused attempt by the national security establishments of the US and China could reliably trace each other’s progress on AI development. I will say that it’s difficult to imagine this strategy consistently uncovering evidence decisive enough to rule out a dedicated program of deception. It scores relatively low on effectiveness.
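
One way to see why a clean result from national means is weak evidence is Bayes’ rule. As a sketch, assume a prior belief that the rival is cheating and a probability that a given method catches cheating when it happens; the function below returns how much suspicion should remain after the method turns up nothing. Both inputs are hypothetical, and the sketch assumes the method never raises false alarms.

```python
def posterior_cheating(prior: float, detection_prob: float) -> float:
    """P(rival is cheating | the method found no evidence), by Bayes' rule.

    prior: belief that the rival cheats, before verification (hypothetical).
    detection_prob: chance the method catches cheating when it occurs (hypothetical).
    Assumes no false positives, so a compliant rival always yields a clean result.
    Assumes 0 <= prior < 1.
    """
    missed = prior * (1.0 - detection_prob)  # cheating that goes undetected
    compliant = 1.0 - prior                  # honest rival, clean result
    return missed / (missed + compliant)


# Hypothetical: 30% prior that the rival is cheating.
for d in (0.3, 0.6, 0.95):
    print(f"detection rate {d:.2f} -> residual suspicion {posterior_cheating(0.3, d):.3f}")
```

With a 30% prior, a method that catches cheating only 30% of the time still leaves about 23% residual suspicion after a clean result, while a 95% detection rate drives it to about 2%. A dedicated program of deception is, in effect, an attempt to push the detection rate down.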

In terms of feasibility and likelihood, this option scores highest. The US already tasks some of its intelligence services with tracking emerging technologies in China. And, were a race to materialize, a targeted campaign would surely ensue by default. The necessary resources are mostly already appropriated and additional funding would hardly be a challenge once the national security implications of AI are illustrated to Congress. We might see something analogous to the expansion of counterterrorist intelligence authorized by the Patriot Act following 9/11.

One unique benefit of these national means is that they can be applied unilaterally. This matters because a trailing actor may be significantly less willing to cooperate on the other methods, so a state that takes a decisive lead may still be able to confirm that lead and confidently slow down development for safety reasons. This camp may also benefit significantly from AI assistance (e.g., automated imagery analysis), meaning an actor with a lead could become increasingly confident that it is ahead.

National or international inspections and monitoring

Competing development actors could in theory agree to allow adversary or independent inspectors into relevant physical locations or grant access to relevant digital assets. This would be analogous, for instance, to the Joint Comprehensive Plan of Action’s authorization of the International Atomic Energy Agency (IAEA) to audit Iran’s nuclear programs to prevent weapons proliferation. The essential factor is an international, independent organization (the IAEA) with transparent inspection authority over Iran’s “entire nuclear supply chain.”4

There are a number of reasons a direct analog would not work in the AI case. For one, full transparency would require revealing secret algorithmic, design, and strategy components of the project. No state would (or should) be willing to give these up. In addition, inspectors could easily be deceived by being granted access to façades of the project. Nevertheless, independent inspections may be a reliable way to referee a treaty if true access can be guaranteed. Because there may be no capable actors outside the competing nations, inspectors drawn from the rival states themselves, rather than from an independent body, may be preferred.

This is a middle option on feasibility. Inspections of this type have clear precedent in a way that on-chip hardware verification does not, but would require significant coordination and legislation over and above national intelligence expansion. It’s not entirely clear how resource-intensive this method would be. Expertise may be an issue, given the development projects are likely to have already centralized the vast majority of qualified researchers and engineers and tied them to the project with strong retention incentives. Demonstrating clearly that the inspected sites and assets are the true state-of-the-art within a project may also require significant resources.

This method also has high variance on effectiveness. If executed well, states could achieve high confidence that their adversary has complied with an agreement. However, it seems difficult to achieve this level of confidence through inspections alone. Perhaps inspections are best deployed as a complement to national intelligence expansion.
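
The case for pairing inspections with national intelligence can be illustrated with a simple calculation. Assuming each method independently detects a violation with some probability, the chance that at least one catches it is one minus the product of their miss rates. The function name and detection rates below are hypothetical.

```python
import math


def combined_detection(detection_probs: list[float]) -> float:
    """Chance that at least one method detects a violation, assuming the methods
    fail independently of one another (an optimistic assumption)."""
    miss_all = math.prod(1.0 - p for p in detection_probs)
    return 1.0 - miss_all


# Hypothetical detection rates.
national_means = 0.4
inspections = 0.5

print(f"national means alone: {combined_detection([national_means]):.2f}")
print(f"plus inspections:     {combined_detection([national_means, inspections]):.2f}")
```

Here two mediocre methods (40% and 50%) combine to 70%. Independence is the optimistic case: a deception program that fools inspectors with a façade may well be built to fool satellites too, and correlated failures would shrink the gain.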

On-chip hardware mechanisms

The most explored option in the AI safety community has been on-chip hardware mechanisms. Proposals of this type, presented in “Secure, Governable Chips” and “Hardware-Enabled Governance Mechanisms,” involve using specific modules installed on frontier AI chips to monitor and verify compliance with treaties. These excellent reports explain how such mechanisms could be used to track AI training locations, scale, content, and further aspects of development. When paired with clever software solutions, these mechanisms could drastically decrease the cost and uncertainty involved in verification.
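
To make the idea concrete, here is a minimal sketch of the verifier side, under assumptions made purely for illustration: each registered chip signs a per-job usage report, and the verifier checks the signatures and sums compute against a treaty cap. `UsageReport`, `sign_report`, `verify_job`, the key registry, and the cap are all hypothetical, and HMAC with per-chip shared keys stands in for whatever attestation scheme the cited reports actually propose.

```python
import dataclasses
import hashlib
import hmac
import json


@dataclasses.dataclass(frozen=True)
class UsageReport:
    """Hypothetical record a chip's security module might emit for one job."""
    chip_id: str
    job_id: str
    flop: float      # operations this chip performed for the job
    signature: str   # hex-encoded HMAC-SHA256 over the fields above


def _payload(chip_id: str, job_id: str, flop: float) -> bytes:
    # Canonical serialization so signer and verifier hash identical bytes.
    return json.dumps({"chip": chip_id, "job": job_id, "flop": flop},
                      sort_keys=True).encode()


def sign_report(key: bytes, chip_id: str, job_id: str, flop: float) -> UsageReport:
    # Stand-in for signing inside the chip; a real design would keep the key in hardware.
    sig = hmac.new(key, _payload(chip_id, job_id, flop), hashlib.sha256).hexdigest()
    return UsageReport(chip_id, job_id, flop, sig)


def verify_job(registry: dict[str, bytes], reports: list[UsageReport],
               job_id: str, flop_cap: float) -> bool:
    """True if every report for job_id comes from a registered chip, carries a
    valid signature, and the job's total compute is at or under flop_cap."""
    total = 0.0
    for r in reports:
        if r.job_id != job_id:
            continue
        key = registry.get(r.chip_id)
        if key is None:
            return False  # unregistered chip: treat as non-compliant
        expected = hmac.new(key, _payload(r.chip_id, r.job_id, r.flop),
                            hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, r.signature):
            return False  # altered or forged report
        total += r.flop
    return total <= flop_cap


# Hypothetical registry of per-chip keys and a hypothetical treaty cap.
registry = {"chip-a": b"secret-a", "chip-b": b"secret-b"}
reports = [
    sign_report(registry["chip-a"], "chip-a", "run-1", 4e24),
    sign_report(registry["chip-b"], "chip-b", "run-1", 5e24),
]
print(verify_job(registry, reports, "run-1", flop_cap=1e25))  # total is within this cap
print(verify_job(registry, reports, "run-1", flop_cap=8e24))  # total exceeds this cap

# Under-reporting a chip's usage breaks its signature.
tampered = [dataclasses.replace(reports[0], flop=1e20), reports[1]]
print(verify_job(registry, tampered, "run-1", flop_cap=1e25))
```

A shared-key scheme is a simplification: anyone holding a chip’s key could also forge its reports, so a real design would more plausibly use public-key signatures rooted in tamper-resistant hardware. The check also only covers chips that report at all; unregistered chips are invisible to it.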

On-chip hardware mechanisms are about as close as it gets to an absolute, comprehensive guarantee of mutual verification. Were all the frontier AI chips produced in the US and China to be equipped with the appropriate modules and permissions, evasion would be difficult. Additionally, this option gives the most fine-grained insight into progress without requiring either actor to release secret information. Although evasion is possible through domestic production of non-registered chips, these activities are relatively visible through national technical means.

It must be noted that on-chip hardware mechanisms of this kind, though not entirely unprecedented, would require at least a few years of research and development (and chip production) to come online. This presents a huge obstacle to feasibility, since there is likely to be a short window in which negotiators are willing to agree on terms, during which verification methods must be immediately available. Whether this is reason to reject on-chip mechanisms or start research and production on them now is an open question.

Although this option requires large-scale production and rollout, it’s likely not a particularly costly solution. The involved governments, which exert massive influence over the AI chip supply chain, could simply require that all relevant chips be produced with these mechanisms, or order designs of this type for the chips they are likely to purchase regardless.

On-chip hardware mechanisms, if implemented across the board, would provide the most effective guarantee of mutual verification. However, this solution is significantly less precedented than the others and therefore less likely, at least by default, to be pursued.

Conclusion

If development is nationalized, an all-out race to transformative AI is likely to end in catastrophe. Competing national projects are likely to develop recklessly due to uncertainty about the opposing actor’s progress. This is especially true in cases where the race is, or appears to be, close. In cases like these, mutual verification, meaning the ability of each actor to semi-transparently see how much progress the other has made towards its goal, may provide a way to reintroduce safety incentives to the race. This is possible because compliance with the terms of a development treaty can be observed and verified by each actor, allowing for (at least limited) coordination.

Three pathways to mutual verification are particularly promising:

National intelligence and technical means. Focusing the national intelligence establishment of each nation on determining the other’s AI progress may provide useful insight. This looks like a robustly good (though perhaps not adequate) intervention that is likely to happen by default.

National or international inspections and monitoring. A site inspection model, perhaps modeled after the Iran nuclear deal, may provide mutual insight.

On-chip hardware mechanisms. The comprehensive proliferation of security modules on datacenter AI chips would virtually guarantee reliable mutual verification, though this solution seems unlikely to be implemented.

These strategies should likely be pursued in tandem so that negotiators have as many open avenues as possible when competing actors can be brought to the table.

The most pressing remaining question is whether and which agreement terms can facilitate the necessary investments in safety. In this post I floated a few options, such as cooldown periods on large training runs, but there seems to be plenty of low-hanging analysis left to do to find better solutions. I may take up this question in a later piece.